Current AI Safety-ism is rooted in bad Anti-Natalist ideas.

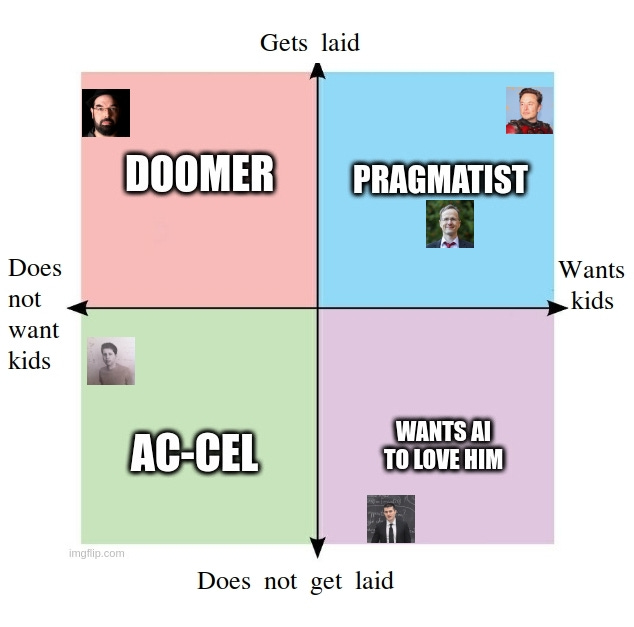

The following is a meme I recently posted:

This is the blog post explaining the left side of the meme.

The AI debate keeps going on Twitter and elsewhere between some of the “AI safety people” who think AI will destroy the world (whom I am going to call doomers) and a mix of other positions ranging from “nah, it will be fine” to “let’s go even faster”.

I used to be a lot more of a doomer before I got married, so I am familiar with the position. When debating with doomers, a lot of people have trouble coming up with well-thought-through arguments against “more intelligence = doom.” AI is, in fact, a dangerous technology. However, the entire “doomer memeplex” contains many surrounding assumptions and fundamental axioms that make doomers both overestimate the danger and underestimate certain techniques for its mitigation.

One of the core problems is anti-natalism. None of the prominent doomers have kids. They don’t seem to plan to. When people in that community do have kids or start thinking about this, they tend to want to find other communities that are more welcoming to them. Talking about pro-natalist perspectives or even saying how having a kid changes one’s perspective on human values and evolution earns only downvotes on LessWrong.

Why does this matter? It matters for many reasons, but the one relevant to this post is that doomers have a limited view of how the majority of people think about life, which leads to several very fundamental mistakes when they think about AI. The main problem is that doomers believe that human values have nothing to do with evolution or having kids. Thus, they believe that humans have “escaped” evolution’s control and therefore it is guaranteed that AI will escape human control.

An example of bad reasoning is here. I am not going to refute it point by point; nearly every statement is wrong.

So, let’s talk about the question correctly – how do “human values” relate to evolution? What is the model of “how humans behave?” and how much of this relates to “inclusive genetic fitness”?

Note, when we talk about “models of human behavior” these are never going to be exact scientific explanations like “orbital mechanics.” Rather they are statements about “averages” that don’t always hold true for every human.

Define a few important terms:

“r-selected” - r-selected species are those that emphasize high growth rates and produce many offspring.

“K-selected” - By contrast, K-selected species display traits associated with living at densities close to carrying capacity. They are typically strong competitors in such crowded niches and invest more heavily in fewer offspring, each of which has a relatively high probability of surviving to adulthood.

“inclusive genetic fitness” (IGF) - a somewhat nebulous term that roughly describes how many copies of a person’s genes exist in the population, as well as the evolutionary quality of the individuals carrying them.

“optimizing” - the process of increasing a variable, or a desire to increase a variable

“maximizing” - a type of optimization where you always want the variable to go higher and higher.

“satisficing” - a type of optimization where you want the variable to rise above a threshold, but after that you no longer care as much about increasing it (you want the variable to be at least 5, but you are somewhat indifferent between 5 and 6). This is related to “decreasing marginal utility” in economics.
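To make the difference between the last two terms concrete, here is a minimal sketch in Python. It is only an illustration: the function names, the threshold of 5 (borrowed from the example above) and the `slack` value of 0.1 are my own assumptions, not a claim about how any real person or AI system is built.

```python
from typing import Callable


def maximizer_utility(x: float) -> float:
    """Maximizer: every extra unit of x is worth as much as the last one."""
    return x


def satisficer_utility(x: float, threshold: float = 5.0, slack: float = 0.1) -> float:
    """Satisficer: full value up to the threshold, only a weak preference above it.

    `threshold` and `slack` are illustrative assumptions, not measured quantities.
    """
    if x <= threshold:
        return x
    return threshold + slack * (x - threshold)


def marginal_gain(utility: Callable[[float], float], x: float, step: float = 1.0) -> float:
    """How much better off the agent feels after moving from x to x + step."""
    return utility(x + step) - utility(x)


if __name__ == "__main__":
    # Compare how much each kind of optimizer cares about one more unit.
    for x in (3.0, 5.0, 8.0):
        print(
            x,
            marginal_gain(maximizer_utility, x),
            round(marginal_gain(satisficer_utility, x), 3),
        )
```

Below the threshold the two agents behave the same. Above it, the satisficer still prefers more, but only weakly, which is the sense in which it remains an optimizer without being a maximizer.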

The general point I am trying to make is:

People are “inclusive genetic fitness optimizers”

In other words, IGF is a large portion of most people’s value set. Now, it is notable that humans are K-selected compared to many other animals. In other words, humans care more about quality than most animals. It is possible that people in the past in low-density environments were “more-r-selected” and cared more about quantity than today. This means that “inclusive genetic fitness” for people doesn’t just mean the “number of genes in the next generation,” but also the “quality of said genes”. Humans can see "one step ahead" and know the rough prospects their children have in the dating market. "Resources" is not just money, it is also knowledge, beauty, etc. Men and women have varying perspectives on quality / quantity tradeoffs.

However, when I say “optimization,” I don’t necessarily mean “maximization.” Not every man is lining up in front of sperm banks. Most people seem to have a target number of kids and a target level of “taking care of them.” Doomers seem to take these examples of non-maximization as proof that human “values” have *nothing* to do with IGF, while in reality they show a more constrained version of optimization. It seems that many people aim for a “good enough” level of both quantity and quality rather than maximization. In other words, people tend to act as “satisficers.” There are multiple possible reasons for this:

It may just be hard for evolution to build a “maximizer.”

Maximization is a difficult task even in theory and predicting what exact circumstances maximize your IGF in the future is complex. Filling a nation with copies of oneself makes it more susceptible to a specific variant of a disease or internal conflict, for example. It might be easier for evolution to create a set of heuristics that add up to “limited” optimization. However, the notion of “limited” optimization is not the same as “no optimization,” contrary to doomer worldviews.

Limited self-trust in evaluating outcomes.

People might not be able to trust themselves to evaluate the exact dynamics of the dating market for their kids. Especially in times of rapid change, it might be more advantageous to give their children more autonomy and move themselves out of the picture. As such, a lot of people don’t have the self-trust to enact “maximization” behaviors at older ages.

Civilizational agreements against maximization

Some people may harbor maximization tendencies (Genghis Khan, people who donate to many sperm banks), but society looks down on many such behaviors. One can view civilization as an agreement between maximizers to forgo maximization tendencies and become satisficers instead. This works to prevent the most egregious ways that someone might go about increasing IGF. However, these agreements and perceptions of them are not perfect. Some people and societies incorrectly view “wealth” as a breach of the “anti-maximization” agreement, for example. As civilization becomes more complex, though, we can allow more sophisticated agreements between people.

The general point is that while behavior is the best evidence of values, people can sometimes constrain their “full value” expression as a point of agreement and towards greater cooperation.

Density

Population density seems to have an impact on fertility, even in mice and other rodents. In people, cities tend to have lower birth rates than less dense population centers. Thus, density can be seen as a signal that a population is reaching a “carrying capacity,” and adding a lot more people could become counter-productive. Lots of people worry about “over-population”; it is never clear if this is a genuine concern or a cover for something else. “Group selection” is a controversial theory in evolution, but density decreasing fertility is an interesting cross-species data point. Of course, “density” is a signal, and signals are not always accurate.

Once again, these are hypotheses about why many people differ from the “maximization” model and prefer what looks like “limited” optimization. However, there exist people, such as the doomers themselves, who seem to be hard anti-natalists and are therefore themselves somewhat misaligned with broader human values.

Now, how does evolutionary theory explain anti-natalism? It’s somewhat of a puzzle, really. I suspect it’s a mix of “runaway virtue signaling processes,” “misunderstanding of anti-maximization signals of civilization,” and a wrong “gut-level” understanding of density. I hope to expand on these in future posts.

Does this particular problem mean that the doomer position is always wrong and that AI is perfectly safe? No, AI is still a dangerous technology. Anti-natalism is pretty common in “accelerationist” positions as well, although it tends to manifest in different types of errors.

The main takeaway from this is that a community that has no kids (and is anti-kids) is likely heavily subject to anti-human signaling spirals. It will never be capable of understanding what human values are and are not, or which mathematical models will achieve them and which will not.