AI as a Civilizational Risk Part 3/6: Anti-economy and Signal Pollution

Post-publishing note: I originally published this essay as an entry in the “FTX Future Fund” Worldview Prize. I had not done much research into FTX before submitting. LessWrong’s reception of my posts was lukewarm. Shortly after, FTX collapsed, the entire FTX Future Fund team quit, and the fund ceased to exist. The fact that people in effective altruism who were closely involved with FTX either didn’t notice or chose not to voice their concerns is a significant update: the anti-economy (“techno-fraud,” scams, and their crossover with legitimate business) as a general category is more common than expected. It is likely more common than the general public or rationalists suspect, and, as I argue below, it will only become more common as certain forms of AI become more prominent.

Any social problem in your society can and will be made worse by adding AI. Just because we tolerate a problem without society collapsing does not mean we can tolerate an AI-enhanced version of it.

Spam as an example of Anti-Economy

We can talk about the problems of behavioral modification in economic terms. Propaganda and engagement AIs centralize benefits and distribute costs, the well-known economic problem of negative externalities. The tools we have created do not price in those externalities, which may be so significant that large companies could be net negative for humanity if they use too many behavior modification AIs. I like to call this the anti-economy. A new term is warranted because the magnitude of distributed costs has become high enough to form its own "externality economy."

The most obvious example of the anti-economy is spam. Spam and the related problem of scams rely on many automated tools. Many of these tools are not AIs yet, but they will likely incorporate AI within a reasonably short time frame. They centralize a tiny benefit to their creators, usually through illegal or borderline illegal means, while destroying a great deal of people's valuable attention and time, thus distributing massive costs. Entire media, such as phone calls and paper mail, have significantly underperformed their potential utility because so much of their traffic is spam, including a large portion of calls from unrecognized numbers. Spam phone calls can have deadly consequences.
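The asymmetry described above can be illustrated with a toy calculation. Every number below is a hypothetical placeholder chosen for illustration, not measured data, and the `SpamCampaign` class is a made-up name; the point is only that a concentrated gain to one party can coexist with a much larger cost spread thinly across many people.

```python
from dataclasses import dataclass


@dataclass
class SpamCampaign:
    """Toy model of centralized benefits and distributed costs (hypothetical numbers)."""
    messages_sent: int               # total messages delivered
    response_rate: float             # fraction of recipients who respond
    profit_per_response: float       # spammer's gain per response, in USD
    seconds_lost_per_message: float  # attention each recipient spends on one message
    value_of_hour_usd: float         # assumed value of one hour of a recipient's time

    def centralized_benefit(self) -> float:
        """Gain captured by the spammer."""
        return self.messages_sent * self.response_rate * self.profit_per_response

    def distributed_cost(self) -> float:
        """Attention cost summed over all recipients."""
        hours_lost = self.messages_sent * self.seconds_lost_per_message / 3600
        return hours_lost * self.value_of_hour_usd

    def cost_per_recipient(self) -> float:
        """The cost each individual bears: small enough to be easy to ignore."""
        return self.distributed_cost() / self.messages_sent

    def net_social_value(self) -> float:
        """Spammer's gain minus everyone else's loss."""
        return self.centralized_benefit() - self.distributed_cost()


campaign = SpamCampaign(
    messages_sent=1_000_000,
    response_rate=0.0001,
    profit_per_response=20.0,
    seconds_lost_per_message=10.0,
    value_of_hour_usd=20.0,
)
print(f"spammer gain:           ${campaign.centralized_benefit():,.0f}")
print(f"total distributed cost: ${campaign.distributed_cost():,.0f}")
print(f"cost per recipient:     ${campaign.cost_per_recipient():.2f}")
print(f"net value to society:   ${campaign.net_social_value():,.0f}")
```

With these made-up inputs, the spammer gains about $2,000 while recipients collectively lose about $55,600 of time, roughly six cents each, so no single victim has much reason to fight back.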

The anti-economy of online bots, scams, and spam is a clear net negative for humanity. In addition, many legitimate or quasi-legitimate businesses resort to spam or scam-like tactics. Hidden service costs and bot-driven fake reviews create a false perception of product quality. The economy intersects with the anti-economy in that a product may not be an outright scam, yet if its creator has solicited some narrow AI's help to write fake reviews, it crosses over into borderline scam territory. Fake reviews, articles, and news are drowning signals of actual knowledge in noise. They are parodied here. Signal pollution is reducing real economic output, and it is potentially damaging actual physical and mental health.

These spam algorithms will increasingly use GPT-like AIs, imposing further costs on everyone. Fake emails and scams already have a non-trivial negative impact. However, the largest distributed costs from AI are likely to come from large companies. Behavioral modification, whether by techno capital seeking engagement or by governments seeking to suppress any negative sentiment about themselves, will come at ever-increasing costs to individuals and to the social cohesion of society. It is terrible for the real economy if people lose trust in their fellow men and in society, and struggle to create valuable economic enterprises unless they rely on existing strong familial bonds.

Society cannot convince spammers to make their algorithms safe. Spam detection algorithms must be more sophisticated and aligned with human values. However, that requires the platforms that host products and build spam detection tools not to optimize strange metrics or promote hyper-partisan ideologies. It is not a good sign if spam detection becomes another political issue. Once again, proper solutions demand social cohesion among the underlying software developers, proper philosophical research into safe utility functions to optimize, and game/decision theory research that allows spam detection to keep functioning under constant attempts to subvert it. Some of this research, such as decision theory, may be a more accessible version of the research needed to align artificial general intelligence.

Possibility of Defensive AIs and Multi-polar scenarios

Could OpenAI's original idea of giving everybody an AI as a defense mechanism improve outcomes by reducing the concentration of power? This idea would not necessarily require any AI to be well-aligned, only that people share power and control over them. Could narrow AIs run by individuals counteract the behavioral modification AIs of techno capital? Imagine a "defensive AI" that browsed the net with you or your kids, carefully flagging inappropriate, hostile, or excessively "out of distribution" content. It could label things as "having destructive potential" for your mind, re-order internet information to reduce your stress levels, or tell you to turn it all off. These are possible in theory but very hard in practice. Defensive AIs are more challenging to create than offensive AIs, and defensive narrow AIs will likely need the same mathematical and philosophical insights as safe AGI. For example, training a defensive AI with existing techniques would require well-labeled data on which mental states are better than others, or which types of personal modification are "good" or "bad." This question is political, and it would be complicated even if it were not. If we do not have defensive AIs protecting people from behavioral modification, that is another cause for concern and evidence that we are not on the path toward a safe AGI.

"Defensive against AI software," if possible, cannot be a purely individual technology. Mental health is a group phenomenon, and social cohesion will need protection separate from individual protection. I have been researching the ideas in this essay, this one in particular, for a couple of years. I expect that software for correctly understanding, and thus aligning with, social cohesion does not need to have an "optimization nature" or be an AI. Instead, it might only need a "contractual nature," similar in some ways to cryptocurrency designs. By analogy, one can view Bitcoin as a "contractual" defense mechanism against the hyperinflation of fiat currency. "Contractual" does not mean "marketplace-like"; it is a broader term that includes marketplace algorithms as well as algorithms such as PageRank. Ideally, this algorithm would understand group relationship dynamics and local social cohesion. It will need to know, at a mathematical level, what kinds of group dynamics are "healthy" or "unhealthy." TrustRank, an algorithm I designed for ranking at my startup YouTiki, is one step toward a contractual understanding of these issues. However, it still requires research before I can scale it to arbitrarily connected social graphs rather than YouTiki's tree-like social graph. My current understanding is that since engagement maximization is closely correlated with the opposite of human utility, making something marginally more beneficial than Twitter's ranking is a trivial problem. Simply borrowing search engine techniques, such as running PageRank on people and sorting content by usefulness, is likely better. However, making something that truly protects social cohesion and is game-theoretically stable is tricky, but I expect TrustRank will form the core of whatever that algorithm turns out to be.

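The suggestion of borrowing search engine techniques can be sketched as plain PageRank run over people instead of web pages, with content then sorted by its author's score. This is textbook PageRank, not the TrustRank algorithm mentioned above, whose details are not given here; the endorsement-graph format, the function names `people_rank` and `rank_content`, the 0.85 damping factor, and the example names are all illustrative assumptions.

```python
def people_rank(
    endorsements: dict[str, list[str]],
    damping: float = 0.85,
    tol: float = 1e-10,
    max_iter: int = 1000,
) -> dict[str, float]:
    """Standard PageRank (power iteration) over a person-to-person endorsement graph.

    endorsements maps each person to the people they vouch for. Scores sum to 1.
    """
    people = set(endorsements)
    for targets in endorsements.values():
        people.update(targets)
    ordered = sorted(people)
    n = len(ordered)
    if n == 0:
        return {}
    rank = {p: 1.0 / n for p in ordered}

    for _ in range(max_iter):
        # Score held by people who endorse nobody is spread evenly over everyone.
        dangling = sum(rank[p] for p in ordered if not endorsements.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in ordered}
        # Each person splits their damped score evenly among those they endorse.
        for source, targets in endorsements.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        delta = sum(abs(new_rank[p] - rank[p]) for p in ordered)
        rank = new_rank
        if delta < tol:
            break
    return rank


def rank_content(posts: list[tuple[str, str]], scores: dict[str, float]) -> list[tuple[str, str]]:
    """Sort (title, author) posts by the author's score, highest first."""
    return sorted(posts, key=lambda post: scores.get(post[1], 0.0), reverse=True)


endorsements = {
    "alice": ["bob", "carol"],
    "bob": ["carol"],
    "carol": ["alice"],
    "dave": ["carol"],
}
scores = people_rank(endorsements)
posts = [("post by dave", "dave"), ("post by carol", "carol"), ("post by bob", "bob")]
for title, author in rank_content(posts, scores):
    print(f"{scores[author]:.3f}  {title}")
```

Like web PageRank, this sketch can be gamed by rings of fake accounts endorsing each other, which is one concrete reason the game-theoretically stable version is the hard part.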
OpenAI's original announcement spawned arguments about whether a scenario of multiple AIs competing with each other is safer than a unipolar scenario, in which a single AI has a decisive strategic advantage. Much of this debate has centered on which existing reference class AI belongs to. Should we compare AI to guns or cars and make it available to everyone, or should we compare it to nukes and only make it available to a handful of nation-states?

My reference class idea is to compare narrow behavioral modification AIs to pollution. The general public understands pollution as an unintended by-product of a manufacturing process. We can think of AIs as polluting not the physical world but rather the *signal space* that people use to communicate.

The capacity for this signal pollution is inherent in the architecture of most AIs. Most AIs map inputs to outputs statistically. Some of these outputs, such as meaningful text, are signals meant to tell the receiver something about the speaker. When the creator of such a signal is an AI, the chance that this representation is accurate is low. The signal becomes decoupled from its meaning and can no longer serve its original purpose. Signal pollution resembles what happened to phone calls from unknown numbers, which have become almost synonymous with spam.
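The decoupling of signal from meaning can be made concrete with Bayes' rule. The sketch below is a simplified model under stated assumptions: a receiver sees a signal (say, a glowing product review), genuine and AI-generated sources emit it at different hypothetical rates, and the only thing that changes is the share of generated sources. The function name and all rates are illustrative.

```python
def p_genuine_given_signal(
    generated_share: float,
    p_signal_if_genuine: float,
    p_signal_if_generated: float,
) -> float:
    """Bayes' rule: probability that an observed signal came from a genuine source."""
    genuine = (1.0 - generated_share) * p_signal_if_genuine
    generated = generated_share * p_signal_if_generated
    if genuine + generated == 0:
        raise ValueError("the signal is impossible under both hypotheses")
    return genuine / (genuine + generated)


# Hypothetical rates: a glowing review comes from 30% of genuine reviewers,
# but from 90% of generated reviews, which are optimized to look convincing.
for share in (0.01, 0.10, 0.50, 0.90):
    trust = p_genuine_given_signal(share, p_signal_if_genuine=0.3, p_signal_if_generated=0.9)
    print(f"generated share {share:.0%}: P(genuine | signal) = {trust:.2f}")
```

With these made-up rates, the receiver's justified trust in a glowing review falls from about 0.97 to about 0.04 as the generated share rises from 1% to 90%, even though no individual genuine review has changed.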

The large-scale destruction of signals pushes people toward creating more expensive signals and causes economic losses. People who still believe in a signal have trouble epistemically relating to people who do not. Signal pollution creates a world where the "meaning" of words, or of entire media, becomes less and less trustworthy. This pollution can happen both when AI generates signals and when AI selects the worst examples of human behavior or otherwise alters "signal distribution." If people begin mistaking the regular hostility and controversy of Twitter's popular users for daily life, they lose the signal of how responsible and mature people sound.

There are examples of multiple competing behavioral modification factions reducing each other's worst excesses. Facebook's engagement AI may wish to block bots created by governments that intend to radicalize people. AIs optimizing their utility functions can diverge from each other just as much as they individually diverge from human values. Given that an individual AI generally acts upon the world by pushing its utility function as high as possible and other variables as low as possible, AIs are, in a sense, competing with each other. By pushing down each other's variables, they might reduce each other's *worst* excesses. However, since each AI still centralizes benefits and distributes costs, they are all part of the anti-economy. The overall utility function represented by their combination is unlikely to have much to do with human values, and their total impact is negative. AI competition is not a reliable way to keep them safe unless most of them are already reasonably aligned "defensive AIs."

The perspective of "distributing costs and centralizing benefits" is another point against the flawed argument that "we can just turn off the AI." The people who control narrow behavior modification AIs do not want to turn them off. Moreover, the people "controlled by" these AIs do not necessarily want to turn them off either, even though their physical and mental health might improve if their exposure to the AI's behavior modification were blocked. They might retroactively believe that what they were doing was wrong, but in the moment they may not be motivated enough to turn off the influence. A third party who understands the situation and wields more power than the AI controller could force the controller to turn it off. However, this requires a governmental or warlike stance toward the AI controller and is thus a breeding ground for conflict.

Economic impacts of Centralizing Benefits and Distributing Costs

Centralizing benefits and distributing costs is not a new problem. If social cohesion falls below its current level, this problem will be reflected in socio-economic metrics. If trust in most products becomes low and bad products outcompete good ones through fake advertising, the ability to extract value from good products becomes low, and actual economic output could decline. Moreover, if they successfully suppress discontent, governments will become freer to centralize benefits and distribute costs in historically common ways.

In the past, this has sometimes resulted in hyperinflation. As mentioned in part 1, hyperinflation is historically one of the most likely modalities of collapse and is also present in non-collapse transitional periods. Several early economic signs point toward this possibility in the West. AI's role in decreasing social cohesion and expanding the anti-economy makes this possibility more likely than historical priors suggest. Low social cohesion between elites and the general population means the elites do not care if they over-print money and distribute the cost to currency holders. Also, governments' desire and ability to suppress simple challenges to their legitimacy using narrow AIs means they are less afraid of popular discontent and thus feel freer to print money. Enormous government spending and a desire to financially reward one's voters point to a desire to print money. Narrow AI also fuels hostility between the Great Powers, and these hostilities reduce the desire to keep the dollar as a reserve currency. The anti-economy's concentration of benefits and distribution of costs means fewer vital goods are available for trade relative to the "anti-economic" production of junk content. Much of the directionality of AI-enabled civilizational risk points toward higher inflation. Higher inflation will become a wedge issue, lower social cohesion even further, and potentially create a vicious feedback loop of hyperinflation and hostility.

Thus, hyperinflation is the most likely scenario by which Western civilization could collapse. I would give this outcome a 31% probability by 2033 (unconditionally). Hyperinflation is more likely than civil war (~5%) or a drop in life expectancy to 65 years (~3%), but it can accompany both. If the anti-economy continues unchecked, and civilization invests neither in AGI safety research nor in deploying narrow defensive AIs, the risk of all three rises every year after 2033. Since optimization power points in a single direction, the collapse of social cohesion under this pressure is only a matter of time. The forces destroying social cohesion move at the speed of algorithms, while the pressures attempting to build it, if any, move at the speed of genes. I expect the probability of the collapse of the West to be ~92% by 2070, conditional on the absence of proper safety redirection.
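A cumulative forecast is easier to compare across horizons when converted into a constant annual probability. The sketch below assumes a 2022 starting year and a constant yearly hazard, neither of which the forecasts themselves specify; the function name is illustrative. Because the 2033 figure is unconditional and about hyperinflation, while the 2070 figure is conditional and about collapse, the two rates are not strictly comparable.

```python
def implied_annual_hazard(cumulative_prob: float, years: float) -> float:
    """Constant yearly probability h such that 1 - (1 - h) ** years == cumulative_prob."""
    if not 0.0 <= cumulative_prob < 1.0 or years <= 0:
        raise ValueError("need 0 <= cumulative_prob < 1 and years > 0")
    return 1.0 - (1.0 - cumulative_prob) ** (1.0 / years)


START_YEAR = 2022  # assumption: the year the forecasts were made
forecasts = [
    ("hyperinflation by 2033 (unconditional)", 0.31, 2033),
    ("collapse of the West by 2070 (conditional)", 0.92, 2070),
]
for label, prob, end_year in forecasts:
    h = implied_annual_hazard(prob, end_year - START_YEAR)
    print(f"{label}: {h:.1%} per year")
```

Under these assumptions the two figures correspond to roughly 3.3% and 5.1% per year; this is one way of reading the numbers, not a derivation of them.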

Life expectancy as a deciding metric considering both positive and negative impact

It is worth considering AIs that do not modify behavior and their potential impact. Companies will use many AIs to automate or speed up large parts of the economy, such as driving or manufacturing. AIs such as Stable Diffusion or Copilot are likely to speed up art creation and coding. Many people point to these as plausible avenues of civilizational improvement. Kurzweilian or accelerationist perspectives point to this as a reason to feel optimistic and to increase the rate of technological innovation. However, AI's impact looks positive only if one looks at a subset of current technological innovation. Using AI to manufacture things more effectively is net positive for humanity. Right now, AI can drastically speed up the creation of art and boilerplate code. Self-driving cars will increase productivity in the coming decades and reduce deaths from car accidents, which number 35k to 50k per year. Using AI to improve advertising for a bad product, by contrast, is net negative for humanity. Particularly harmful products include extremely invasive medical interventions that are counterproductive and require further medical interventions to undo. Overspending on healthcare is one example of something that may raise GDP while negatively affecting important overall metrics such as life expectancy.

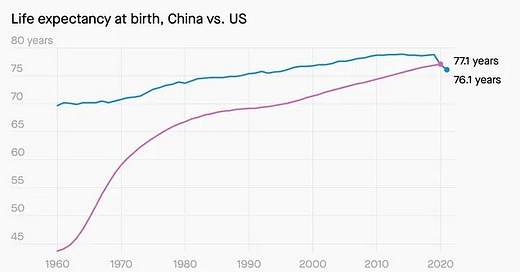

Thus, if narrow AI continues forward unchecked, which is very likely, we will see further divergence between life expectancy and GDP per capita (PPP), especially in the West. Across countries, life expectancy and log GDP per capita have a roughly linear relationship. However, even in 2018, US life expectancy was below this trendline.
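Being "below the trendline" means having a negative residual in a cross-country fit of life expectancy against the logarithm of GDP per capita. The sketch below shows that computation with ordinary least squares. The function names are illustrative, and the data points are synthetic values placed on a made-up line, not real country figures; with real data one would substitute actual GDP per capita (PPP) and life expectancy values.

```python
import math


def fit_log_linear(gdp_per_capita: list[float], life_expectancy: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of life_expectancy = a + b * ln(gdp_per_capita)."""
    xs = [math.log(g) for g in gdp_per_capita]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(life_expectancy) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, life_expectancy))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def trendline_residual(intercept: float, slope: float, gdp: float, life_exp: float) -> float:
    """Years above (+) or below (-) the cross-country trendline."""
    return life_exp - (intercept + slope * math.log(gdp))


# Synthetic points placed exactly on a made-up line (a=20, b=6); NOT real country data.
gdps = [1_000.0, 5_000.0, 20_000.0, 60_000.0]
life_exps = [20 + 6 * math.log(g) for g in gdps]
a, b = fit_log_linear(gdps, life_exps)

# A hypothetical rich country sitting 3 years below the fitted line.
print(round(trendline_residual(a, b, 60_000.0, life_exps[-1] - 3), 6))
```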

GDP may rise to the cheerful cries of acceleration, but the actual human value reflected in people not dying will keep going down. The exact causes will be similar to what we see today. Deaths of despair will rise. People demoralized by hateful internet discourse and a lack of friends will turn to legal or illegal drugs or digital addiction, or fail to take care of their health. Deaths of despair are already 2-3x as numerous as car accident deaths. From the perspective of reducing deaths, eliminating all car accidents has a lower upside than solving "deaths of despair." Other deaths that trace their origin to lower social cohesion will also keep increasing.

Behavioral modification of people toward digital addiction, advertising, and radicalization is more straightforward than setting up robust and safe molecular factories, especially in the next ten years. Although the economic benefits of AI are potentially massive, the negative effects of eroded social cohesion still overshadow them. For example, if an AI finds a way to 3D print expensive medical devices at home, this could improve many medical outcomes. However, it is easy for existing players in the field to purchase persuasion bots and fake news bots that invent or over-emphasize stories of the new devices failing, thus causing the government to ban them. AI-driven status signals will also influence people to look down on those who use innovative technology.

In trying to create a rough theoretical model of societal utility as a function of technology, time, and social cohesion, I expect technology to grow exponentially and the utility derived from technology to increase linearly over time. However, social cohesion is a bounded variable. Its minimum value corresponds to a total war of all individuals against all individuals, which will have low utility regardless of technological level. Civil war and the formation of multiple states will likely happen before that point. In other words, each marginal decrease in social cohesion is more dangerous the less of it there is.
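One way to write this rough model down is sketched below. The multiplicative form, the symbols, and the example choice g(c) = √c are assumptions for illustration, not part of the original argument: technology T grows exponentially, utility from technology grows linearly (as a multiple of ln T), cohesion c is bounded in [0, 1] with c = 0 standing for the war of all against all, and a concave g makes each lost unit of cohesion costlier as cohesion falls.

```latex
\begin{aligned}
T(t) &= T_0\, e^{r t}, \qquad U_{\text{tech}}(t) = k \ln T(t) = k\,(\ln T_0 + r t) \\
U(t, c) &= g(c)\, U_{\text{tech}}(t), \qquad c \in [0, 1],\quad g(0) = 0,\quad g(1) = 1,\quad g' > 0,\quad g'' < 0 \\
g(c) &= \sqrt{c} \;\Longrightarrow\; \frac{\partial U}{\partial c} = \frac{U_{\text{tech}}(t)}{2\sqrt{c}} \;\to\; \infty \quad \text{as } c \to 0
\end{aligned}
```

Setting g(0) = 0 encodes the claim that total war has low utility regardless of technological level, since no value of U_tech(t) can compensate for it.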

How do we understand in practice how strongly automation improvements and the destruction of social cohesion affect society? We cannot look at purely economic metrics, since the anti-economy "contributes" to GDP. We need to look at harder-to-fake proxies for human utility, such as life expectancy. How has that been going? Badly.

A significant part of the recent drop in life expectancy is due to COVID pandemic deaths. However, COVID does not account for the entire drop. Total deaths in 2021 were 3.46 million, compared with 2.85 million in 2019. Of the roughly 600 thousand excess deaths, 460 thousand were due to COVID; the rest were due to other causes.
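The arithmetic behind these figures can be checked directly. The sketch uses only the numbers given in the text (which, from context, refer to the US) and a deliberately naive baseline: the raw 2019 death count.

```python
# Figures as given in the text (annual total deaths).
deaths_2019 = 2_850_000
deaths_2021 = 3_460_000
covid_deaths_2021 = 460_000

# Naive excess: raw difference from 2019, with no adjustment for population growth or aging.
excess = deaths_2021 - deaths_2019
non_covid_excess = excess - covid_deaths_2021

print(f"excess deaths vs 2019:   {excess:,}")
print(f"not attributed to COVID: {non_covid_excess:,} ({non_covid_excess / excess:.0%} of excess)")
```

This gives about 610 thousand excess deaths, of which roughly 150 thousand (about a quarter) were not attributed to COVID. A proper excess-mortality estimate would compare against an expected baseline that accounts for population growth and aging, which would shrink the raw figure somewhat.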

Predictions of the life expectancy trend depend on the reference class of the "COVID pandemic." If you consider it a once-in-100-years pandemic, you might dismiss the recent drop as a one-time event. However, if you consider it an instance of the class "manmade technology spinning out of control," it belongs to a larger reference class that includes AI. The life expectancy trend over the next couple of years will be a significant sign of whether social degradation is outpacing technological growth. It is entirely possible that life expectancy in the US is on a downward trend right now and will not have a positive first derivative for decades, especially if narrow AIs are left unchecked. One of my predictions is that life expectancy could drop to 65 years by 2033. I give this ~3% probability: less likely than hyperinflation, but still enough to be a concern.

While life expectancy may or may not drop in the next ten years, it is undoubtedly failing to rise as much as it could if we got behavioral modification AIs under control. While the country "as a whole" may have incentives to stop deaths of despair, these issues will continue because benefits are centralized and costs are distributed. The issues are hidden from the view of elites because their internal metrics and GDP look great, so they are not paying attention to significant increases in suicides and other deaths of despair. The scant discussion on Twitter of the 2021 life expectancy drop strongly indicates that the actual metrics of overall well-being are likely to be ignored.

I suspect the discourse focuses too much on AI's manufacturing and job replacement functions, from the perspective of both risks and benefits. On the risk side, the focus on these types of AIs has given rise to the "paperclip manufacturing" meme and its more advanced cousin, "grey goo manufacturing." On the benefit side, it has given rise to accelerationism, the idea that AI is beneficial for the same reason the free exchange of goods is beneficial. There is some merit to these examples. Many AI advances will revolutionize certain parts of the economy. However, free-market logic does not apply to behavioral modification pushes that are not fully consensual.

If we look at overall health metrics, the potential and actual upsides of positive AI development in automation and manufacturing are weaker than the downsides of AI-enabled behavioral modification and decreases in social cohesion.

AI is not "developing a will of its own," a phrase used by some people who misunderstand the problem. Behavioral modification AIs optimize the utility that developers programmed into them. However, in doing so, they decrease utility in all the other aspects they are not looking at, enough to be a net negative for society. A similar problem has been pointed out with AGIs, but just because this collapse happens slowly does not mean humanity can quickly stop it.

Given current trends, we will soon reach a point where AI no longer creates technological benefits from production quickly enough to counteract the negative side effects of behavioral modification. Further decreases in life expectancy may mean this point has already passed for the US.

Concentration of power

People have raised concerns that AI automation of work will concentrate economic power. The debate is likely unsettled and depends on many factors. Pundits have claimed that economic power would become more concentrated after nearly every invention of the last 50 years. According to the media, computers, smartphones, the internet, or technology in general might increase inequality. The actual Gini coefficient of the world (not broken down by individual countries) decreased (meaning lower inequality) between 1990 and 2010. However, it did increase in many individual countries, including the US.
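The Gini coefficient is a standard inequality measure. A minimal sketch of the population version, assuming a plain list of individual incomes, is below; real estimates rely on survey data, weights, and purchasing-power adjustments, and the function name is illustrative. A world Gini pools everyone into one list, which is how it can fall when poorer countries catch up with richer ones even while many countries' internal coefficients rise.

```python
def gini(incomes: list[float]) -> float:
    """Population Gini coefficient: 0 is perfect equality, (n - 1) / n means one person holds everything.

    Equivalent to sum_i sum_j |x_i - x_j| / (2 * n**2 * mean), computed in O(n log n)
    via the sorted-order identity sum_i (2i - n - 1) * x_(i) / (n * total).
    """
    values = sorted(incomes)
    n = len(values)
    total = sum(values)
    if n == 0 or total <= 0:
        raise ValueError("need at least one income and a positive total")
    weighted = sum((2 * rank - n - 1) * x for rank, x in enumerate(values, start=1))
    return weighted / (n * total)


print(gini([10, 10, 10, 10]))  # 0.0, everyone equal
print(gini([1, 2, 3, 4]))      # 0.25
print(gini([0, 0, 0, 100]))    # 0.75, one person holds everything
```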

In the Middle Ages, economic or political power became more concentrated due to the stirrup (Medieval Feudalism and the Metal Stirrup), and it later became less concentrated due to the invention of the musket. Technology as a whole likely has no evident tendency toward centralization or decentralization; the direction depends on several factors. In particular, whether job-assisting AI concentrates or decentralizes power likely depends on the threshold of hardware required to run useful AIs.

One scenario is that AIs require a large data center to train and run, for example, OpenAI's Dota-playing AI; in that case, we will see more power centralization. If most AIs have to be large, those who create them will likely reap many of the benefits.

At the opposite end of the spectrum, if training and deploying AIs require little hardware but a deeper understanding of problem domains, those AIs will distribute economic power.

A third and most likely scenario is that complex AIs require a lot of hardware and data to train but little hardware to use. "Hard training, but easy use" increases the power of large companies and enterprising individuals relative to legacy businesses that have trouble training or using AIs. AI will likely create new types of work: prompt engineering, training data creation, and data evaluation. Each of these could become more valuable as AI becomes commoditized. The more AI becomes commoditized and trained by many companies, the more valuable these economically symbiotic activities will be.

Thus, it is challenging to classify AI for economic production as either centralizing or decentralizing overall. AI creates an alliance of the high and the low against the middle. The "high" are the creators of AI and data center operators, the "low" are the people who supply training data, and the "middle" are more traditional capital structures unable to incorporate the technology. Overall, the concentration or decentralization of economic power due to AI production improvements is less likely to cause or mitigate civilizational risk than the concentration of power related to AI's ability to modify people's behavior.

The above statements are somewhat contingent on how much of AI's benefits "rent-seeking" captures. If the taxation of AI and the costs of doing business grow along with productivity, then the benefits would largely be captured by governments and their political clients. Specifically, in nations with low social cohesion, the politically connected class has no interest in passing surplus economic value to either the people or politically disconnected industrialists. This factor dampens any inherent centralizing or decentralizing tendency outside the government realm, shifting the situation toward the status quo or toward a conflict over governmental resource allocation. Overall, the metaphors of AI in manufacturing or AI in job replacement are less useful for pointing out potential failure modes of AI. In the absence of social cohesion, much of AI-enabled economic productivity is unlikely to find its way back to the people and be reflected in life expectancy.